|

|

Berrisch, J., & Ziel, F. [-@BERRISCH2023105221]. CRPS learning. Journal of Econometrics, 237(2), 105221.

|

|

|

Berrisch, J., & Ziel, F. [-@BERRISCH20241568]. Multivariate probabilistic CRPS learning with an application to day-ahead electricity prices. International Journal of Forecasting, 40(4), 1568–1586.

|

|

|

Hirsch, S., Berrisch, J., & Ziel, F. [-@hirsch2024online]. Online Distributional Regression. arXiv preprint arXiv:2407.08750.

|

|

|

Berrisch, J., & Ziel, F. [-@berrisch2022distributional]. Distributional modeling and forecasting of natural gas prices. Journal of Forecasting, 41(6), 1065–1086.

|

|

|

Berrisch, J., Pappert, S., Ziel, F., & Arsova, A. [-@berrisch2023modeling]. Modeling volatility and dependence of European carbon and energy prices. Finance Research Letters, 52, 103503.

|

|

|

Berrisch, J., Narajewski, M., & Ziel, F. [-@BERRISCH2023100236]. High-resolution peak demand estimation using generalized additive models and deep neural networks. Energy and AI, 13, 100236.

|

|

|

Berrisch, J. [-@berrisch2025rcpptimer]. rcpptimer: Rcpp Tic-Toc Timer with OpenMP Support. arXiv preprint arXiv:2501.15856.

|

:::

::: {.fragment .fade-in-then-out}

|

|

Berrisch, J., & Ziel, F. [-@BERRISCH2023105221]. CRPS learning. Journal of Econometrics, 237(2), 105221.

|

|

|

Berrisch, J., & Ziel, F. [-@BERRISCH20241568]. Multivariate probabilistic CRPS learning with an application to day-ahead electricity prices. International Journal of Forecasting, 40(4), 1568–1586.

|

|

|

Hirsch, S., Berrisch, J., & Ziel, F. [-@hirsch2024online]. Online Distributional Regression. arXiv preprint arXiv:2407.08750.

|

|

|

Berrisch, J., & Ziel, F. [-@berrisch2022distributional]. Distributional modeling and forecasting of natural gas prices. Journal of Forecasting, 41(6), 1065–1086.

|

|

|

Berrisch, J., Pappert, S., Ziel, F., & Arsova, A. [-@berrisch2023modeling]. Modeling volatility and dependence of European carbon and energy prices. Finance Research Letters, 52, 103503.

|

|

|

Berrisch, J., Narajewski, M., & Ziel, F. [-@BERRISCH2023100236]. High-resolution peak demand estimation using generalized additive models and deep neural networks. Energy and AI, 13, 100236.

|

|

|

Berrisch, J. [-@berrisch2025rcpptimer]. rcpptimer: Rcpp Tic-Toc Timer with OpenMP Support. arXiv preprint arXiv:2501.15856.

|

:::

::: {.fragment .fade-in-then-out}

|

|

Berrisch, J., & Ziel, F. [-@BERRISCH2023105221]. CRPS learning. Journal of Econometrics, 237(2), 105221.

|

|

|

Berrisch, J., & Ziel, F. [-@BERRISCH20241568]. Multivariate probabilistic CRPS learning with an application to day-ahead electricity prices. International Journal of Forecasting, 40(4), 1568–1586.

|

|

|

Hirsch, S., Berrisch, J., & Ziel, F. [-@hirsch2024online]. Online Distributional Regression. arXiv preprint arXiv:2407.08750.

|

|

|

Berrisch, J., & Ziel, F. [-@berrisch2022distributional]. Distributional modeling and forecasting of natural gas prices. Journal of Forecasting, 41(6), 1065–1086.

|

|

|

Berrisch, J., Pappert, S., Ziel, F., & Arsova, A. [-@berrisch2023modeling]. Modeling volatility and dependence of European carbon and energy prices. Finance Research Letters, 52, 103503.

|

|

|

Berrisch, J., Narajewski, M., & Ziel, F. [-@BERRISCH2023100236]. High-resolution peak demand estimation using generalized additive models and deep neural networks. Energy and AI, 13, 100236.

|

|

|

Berrisch, J. [-@berrisch2025rcpptimer]. rcpptimer: Rcpp Tic-Toc Timer with OpenMP Support. arXiv preprint arXiv:2501.15856.

|

:::

:::

## Overview

:::: {.columns}

::: {.column width="48%"}

#### Online Distributional Regression

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

#### Distributional Modeling and Forecasting of Natural Gas Prices

:::

::::

:::: {.columns}

::: {.column width="48%"}

void main(){

Rcpp::Timer timer;

timer.tic();

// Code to be timed

timer.toc();

}

```

:::

::::

# CRPS Learning {#sec-crps-learning visibility="uncounted"}

Berrisch, J., & Ziel, F. [-@BERRISCH2023105221]. *Journal of Econometrics*, 237(2), 105221.

## Introduction

:::: {.columns}

::: {.column width="48%"}

### The Idea:

- Combine multiple forecasts instead of choosing one

- Combination weights may vary over **time**, over the **distribution** or **both**

2 Popular options for combining distributions:

- Combining across quantiles (this paper)

- Horizontal aggregation, vincentization

- Combining across probabilities

- Vertical aggregation

- Combining at an angle

- @taylor2023angular

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

::: {.panel-tabset}

## Time

```{r, echo = FALSE, fig.height=8, cache = TRUE}

par(mfrow = c(3, 3), mar = c(2, 2, 2, 2))

set.seed(1)

# Data

X <- matrix(ncol = 3, nrow = 15)

X[, 1] <- seq(from = 8, to = 12, length.out = 15) + 0.25 * rnorm(15)

X[, 2] <- 10 + 0.25 * rnorm(15)

X[, 3] <- seq(from = 12, to = 8, length.out = 15) + 0.25 * rnorm(15)

# Weights

w <- matrix(ncol = 3, nrow = 15)

w[, 1] <- sin(0.1 * 1:15)

w[, 2] <- cos(0.1 * 1:15)

w[, 3] <- seq(from = -2, 0.25, length.out = 15)^2

w <- (w / rowSums(w))

# Vis

plot(X[, 1],

lwd = 4,

type = "l",

ylim = c(8, 12),

xlab = "",

ylab = "",

xaxt = "n",

yaxt = "n",

bty = "n",

col = "#80C684FF"

)

plot(w[, 1],

lwd = 4, type = "l",

ylim = c(0, 1),

xlab = "",

ylab = "", xaxt = "n", yaxt = "n", bty = "n", col = "#80C684FF"

)

text(6, 0.5, TeX("$w_1(t)$"), cex = 2, col = "#80C684FF")

arrows(13, 0.25, 15, 0.0, , lwd = 4, bty = "n", col = "#414141FF")

plot.new()

plot.new()

plot.new()

text(6, 0.6, TeX("$w_2(t)$"), cex = 2, col = "#FFD44EFF")

arrows(13, 0.5, 15, 0.5, , lwd = 4, bty = "n", col = "#414141FF")

plot(rowSums(X * w), lwd = 4, type = "l", xlab = "", ylab = "", xaxt = "n", yaxt = "n", bty = "n", col = "#D81A5FFF")

plot(X[, 3],

lwd = 4,

type = "l", ylim = c(8, 12),

xlab = "", ylab = "", xaxt = "n", yaxt = "n", bty = "n", col = "#FFD44EFF"

)

plot(w[, 3],

lwd = 4, type = "l",

ylim = c(0, 1),

xlab = "",

ylab = "", xaxt = "n", yaxt = "n", bty = "n", col = "#FFD44EFF"

)

text(6, 0.25, TeX("$w_2(t)$"), cex = 2, col = "#FFD44EFF")

arrows(13, 0.75, 15, 1, , lwd = 4, bty = "n", col = "#414141FF")

```

## Distribution

```{ojs}

d3 = require("d3@7")

```

```{ojs}

cdf_data = FileAttachment("assets/crps_learning/weights_plot/cdf_data.csv").csv({ typed: true })

```

```{ojs}

function updateChartInner(g, x, y, linesGroup, color, line, data) {

// Update axes with transitions

x.domain(d3.extent(data, d => d.x));

g.select(".x-axis").transition().duration(1500).call(d3.axisBottom(x).ticks(10));

y.domain([0, d3.max(data, d => d.y)]);

g.select(".y-axis").transition().duration(1500).call(d3.axisLeft(y).ticks(5));

// Group data by basis function

const dataByFunction = Array.from(d3.group(data, d => d.b));

const keyFn = d => d[0];

// Update basis function lines

const u = linesGroup.selectAll("path").data(dataByFunction, keyFn);

u.join(

enter => enter.append("path").attr("fill","none").attr("stroke-width",3)

.attr("stroke", (_, i) => color(i)).attr("d", d => line(d[1].map(pt => ({x: pt.x, y: 0}))))

.style("opacity",0),

update => update,

exit => exit.transition().duration(1000).style("opacity",0).remove()

)

.transition().duration(1000)

.attr("d", d => line(d[1]))

.attr("stroke", (_, i) => color(i))

.style("opacity",1);

}

chart = {

// State variable for selected mu parameter

let selectedMu = 1;

const filteredData = () => cdf_data.filter(d =>

Math.abs(selectedMu - d.mu) < 0.001

);

const container = d3.create("div")

.style("max-width", "none")

.style("width", "100%");

const controlsContainer = container.append("div")

.style("display", "flex")

.style("gap", "20px")

.style("align-items", "center");

// Single slider control for mu

const sliderContainer = controlsContainer.append('div')

.style('display','flex')

.style('align-items','center')

.style('gap','10px')

.style('flex','1');

sliderContainer.append('label')

.text('Naive:')

.style('font-size','20px');

const muSlider = sliderContainer.append('input')

.attr('type','range')

.attr('min', 0)

.attr('max', 1)

.attr('step', 0.1)

.property('value', selectedMu)

.on('input', function(event) {

selectedMu = +this.value;

muDisplay.text(selectedMu.toFixed(1));

updateChart(filteredData());

})

.style('width', '100%')

//.style('-webkit-appearance', 'none')

.style('appearance', 'none')

.style('height', '8px')

.style('background', '#BDBDBDFF');

const muDisplay = sliderContainer.append('span')

.text(selectedMu.toFixed(1))

.style('font-size','20px');

// Add Reset button

controlsContainer.append('button')

.text('Reset')

.style('font-size', '20px')

.style('align-self', 'center')

.style('margin-left', 'auto')

.on('click', () => {

selectedMu = 1;

muSlider.property('value', selectedMu);

muDisplay.text(selectedMu.toFixed(1));

updateChart(filteredData());

});

// Build SVG

const width = 600;

const height = 450;

const margin = {top: 40, right: 20, bottom: 40, left: 40};

const innerWidth = width - margin.left - margin.right;

const innerHeight = height - margin.top - margin.bottom;

// Set controls container width to match SVG plot width

controlsContainer.style("max-width", "none").style("width", "100%");

// Distribute each control evenly and make sliders full-width

controlsContainer.selectAll("div").style("flex", "1").style("min-width", "0px");

controlsContainer.selectAll("input").style("width", "100%").style("box-sizing", "border-box");

// Create scales

const x = d3.scaleLinear()

.range([0, innerWidth]);

const y = d3.scaleLinear()

.range([innerHeight, 0]);

// Create a color scale for the basis functions

const color = d3.scaleOrdinal(["#80C684FF", "#FFD44EFF", "#D81A5FFF"]);

// Create SVG

const svg = d3.create("svg")

.attr("width", "100%")

.attr("height", "auto")

.attr("viewBox", [0, 0, width, height])

.attr("preserveAspectRatio", "xMidYMid meet")

.attr("style", "max-width: 100%; height: auto;");

// Create the chart group

const g = svg.append("g")

.attr("transform", `translate(${margin.left},${margin.top})`);

// Add axes

const xAxis = g.append("g")

.attr("transform", `translate(0,${innerHeight})`)

.attr("class", "x-axis")

.call(d3.axisBottom(x).ticks(10))

.style("font-size", "20px");

const yAxis = g.append("g")

.attr("class", "y-axis")

.call(d3.axisLeft(y).ticks(5))

.style("font-size", "20px");

// Add a horizontal line at y = 0

g.append("line")

.attr("x1", 0)

.attr("x2", innerWidth)

.attr("y1", y(0))

.attr("y2", y(0))

.attr("stroke", "#000")

.attr("stroke-opacity", 0.2);

// Add gridlines

g.append("g")

.attr("class", "grid-lines")

.selectAll("line")

.data(y.ticks(5))

.join("line")

.attr("x1", 0)

.attr("x2", innerWidth)

.attr("y1", d => y(d))

.attr("y2", d => y(d))

.attr("stroke", "#ccc")

.attr("stroke-opacity", 0.5);

// Create a line generator

const line = d3.line()

.x(d => x(d.x))

.y(d => y(d.y))

.curve(d3.curveBasis);

// Group to contain the basis function lines

const linesGroup = g.append("g")

.attr("class", "basis-functions");

// Store the current basis functions for transition

let currentBasisFunctions = new Map();

// Function to update the chart with new data

function updateChart(data) {

updateChartInner(g, x, y, linesGroup, color, line, data);

}

// Store the update function

svg.node().update = updateChart;

// Initial render

updateChart(filteredData());

container.node().appendChild(svg.node());

return container.node();

}

```

:::

:::

::::

## The Framework of Prediction under Expert Advice

###

:::: {.columns}

::: {.column width="48%"}

Each day, $t = 1, 2, ... T$

- The **forecaster** receives predictions $\widehat{X}_{t,k}$ from $K$ **experts**

- The **forecaster** assings weights $w_{t,k}$ to each **expert**

- The **forecaster** calculates her prediction:

\begin{equation}

\widetilde{X}_{t} = \sum_{k=1}^K w_{t,k} \widehat{X}_{t,k}.

\label{eq_forecast_def}

\end{equation}

- The realization for $t$ is observed

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

- The experts can be institutions, persons, or models

- The forecasts can be point-forecasts (i.e., mean or median) or full predictive distributions

- We do not need a distributional assumption concerning the underlying data

- @cesa2006prediction

:::

::::

---

## The Regret

Weights are updated sequentially according to the past performance of the $K$ experts.

That is, a loss function $\ell$ is needed. This is used to compute the **cumulative regret** $R_{t,k}$

\begin{equation}

R_{t,k} = \widetilde{L}_{t} - \widehat{L}_{t,k} = \sum_{i = 1}^t \ell(\widetilde{X}_{i},Y_i) - \ell(\widehat{X}_{i,k},Y_i)\label{eq:regret}

\end{equation}

The cumulative regret:

- Indicates the predictive accuracy of the expert $k$ until time $t$.

- Measures how much the forecaster *regrets* not having followed the expert's advice

Popular loss functions for point forecasting @gneiting2011making:

:::: {.columns}

::: {.column width="48%"}

$\ell_2$ loss:

\begin{equation}

\ell_2(x, y) = | x -y|^2 \label{eq:elltwo}

\end{equation}

Strictly proper for *mean* prediction

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

$\ell_1$ loss:

\begin{equation}

\ell_1(x, y) = | x -y| \label{eq:ellone}

\end{equation}

Strictly proper for *median* predictions

:::

::::

## Popular Algorithms and the Risk

:::: {.columns}

::: {.column width="48%"}

### Popular Aggregation Algorithms

#### The naive combination

\begin{equation}

w_{t,k}^{\text{Naive}} = \frac{1}{K}\label{eq:naive_combination}

\end{equation}

#### The exponentially weighted average forecaster (EWA)

\begin{equation}

\begin{aligned}

w_{t,k}^{\text{EWA}} & = \frac{e^{\eta R_{t,k}} }{\sum_{k = 1}^K e^{\eta R_{t,k}}}\\

& =

\frac{e^{-\eta \ell(\widehat{X}_{t,k},Y_t)} w^{\text{EWA}}_{t-1,k} }{\sum_{k = 1}^K e^{-\eta \ell(\widehat{X}_{t,k},Y_t)} w^{\text{EWA}}_{t-1,k}}

\end{aligned}\label{eq:exp_combination}

\end{equation}

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

### Optimality

In stochastic settings, the cumulative Risk should be analyzed @wintenberger2017optimal:

\begin{align}

&\underbrace{\widetilde{\mathcal{R}}_t = \sum_{i=1}^t \mathbb{E}[\ell(\widetilde{X}_{i},Y_i)|\mathcal{F}_{i-1}]}_{\text{Cumulative Risk of Forecaster}} \\

&\underbrace{\widehat{\mathcal{R}}_{t,k} = \sum_{i=1}^t \mathbb{E}[\ell(\widehat{X}_{i,k},Y_i)|\mathcal{F}_{i-1}]}_{\text{Cumulative Risk of Experts}}

\label{eq_def_cumrisk}

\end{align}

:::

::::

## Optimal Convergence

:::: {.columns}

::: {.column width="48%"}

### The selection problem

\begin{equation}

\frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\min} \right) \stackrel{t\to \infty}{\rightarrow} a \quad \text{with} \quad a \leq 0.

\label{eq_opt_select}

\end{equation}

The forecaster is asymptotically not worse than the best expert.

### The convex aggregation problem

\begin{equation}

\frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\pi} \right) \stackrel{t\to \infty}{\rightarrow} b \quad \text{with} \quad b \leq 0 .

\label{eq_opt_conv}

\end{equation}

The forecaster is asymptotically not worse than the best convex combination in hindsight (**oracle**).

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

Optimal rates with respect to selection \eqref{eq_opt_select} and convex aggregation \eqref{eq_opt_conv} @wintenberger2017optimal:

\begin{align}

\frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\min} \right) & =

\mathcal{O}\left(\frac{\log(K)}{t}\right)\label{eq_optp_select}

\end{align}

\begin{align}

\frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\pi} \right) & =

\mathcal{O}\left(\sqrt{\frac{\log(K)}{t}}\right)

\label{eq_optp_conv}

\end{align}

Algorithms can statisfy both \eqref{eq_optp_select} and \eqref{eq_optp_conv} depending on:

- The loss function

- Regularity conditions on $Y_t$ and $\widehat{X}_{t,k}$

- The weighting scheme

:::

::::

##

:::: {.columns}

::: {.column width="48%"}

### Optimal Convergence

EWA satisfies optimal selection convergence \eqref{eq_optp_select} in a deterministic setting if:

- Loss $\ell$ is exp-concave

- Learning-rate $\eta$ is chosen correctly

Those results can be converted to any stochastic setting @wintenberger2017optimal.

Optimal convex aggregation convergence \eqref{eq_optp_conv} can be satisfied by applying the kernel-trick:

\begin{align}

\ell^{\nabla}(x,y) = \ell'(\widetilde{X},y) x

\end{align}

$\ell'$ is the subgradient of $\ell$ at forecast combination $\widetilde{X}$.

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

### Probabilistic Setting

**An appropriate choice:**

\begin{equation*}

\text{CRPS}(F, y) = \int_{\mathbb{R}} {(F(x) - \mathbb{1}\{ x > y \})}^2 dx \label{eq:crps}

\end{equation*}

It's strictly proper [@gneiting2007strictly].

Using the CRPS, we can calculate time-adaptive weights $w_{t,k}$. However, what if the experts' performance varies in parts of the distribution?

Utilize this relation:

\begin{equation*}

\text{CRPS}(F, y) = 2 \int_0^{1} \text{QL}_p(F^{-1}(p), y) dp.\label{eq_crps_qs}

\end{equation*}

... to combine quantiles of the probabilistic forecasts individually using the quantile-loss QL.

:::

::::

## CRPS Learning Optimality

::: {.panel-tabset}

## Almost Optimal Convergence

:::: {style="font-size: 90%;"}

QL is convex, but not exp-concave

Bernstein Online Aggregation (BOA) lets us weaken the exp-concavity condition. It satisfies that there exist a $C>0$ such that for $x>0$ it holds that

\begin{equation}

P\left( \frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\pi} \right) \leq C \log(\log(t)) \left(\sqrt{\frac{\log(K)}{t}} + \frac{\log(K)+x}{t}\right) \right) \geq

1-e^{-x}

\label{eq_boa_opt_conv}

\end{equation}

Almost optimal w.r.t. *convex aggregation* \eqref{eq_optp_conv} @wintenberger2017optimal.

The same algorithm satisfies that there exist a $C>0$ such that for $x>0$ it holds that

\begin{equation}

P\left( \frac{1}{t}\left(\widetilde{\mathcal{R}}_t - \widehat{\mathcal{R}}_{t,\min} \right) \leq

C\left(\frac{\log(K)+\log(\log(Gt))+ x}{\alpha t}\right)^{\frac{1}{2-\beta}} \right) \geq

1-2e^{-x}

\label{eq_boa_opt_select}

\end{equation}

if $Y_t$ is bounded, the considered loss $\ell$ is convex, $G$-Lipschitz, and weak exp-concave in its first coordinate.

Almost optimal w.r.t. *selection* \eqref{eq_optp_select} @gaillard2018efficient.

We show that this holds for QL under feasible conditions.

:::

## Conditions + Lemma

:::: {.columns}

::: {.column width="48%"}

**Lemma 1**

\begin{align}

2\overline{\widehat{\mathcal{R}}}^{\text{QL}}_{t,\min}

& \leq \widehat{\mathcal{R}}^{\text{CRPS}}_{t,\min}

\label{eq_risk_ql_crps_expert} \\

2\overline{\widehat{\mathcal{R}}}^{\text{QL}}_{t,\pi}

& \leq \widehat{\mathcal{R}}^{\text{CRPS}}_{t,\pi} .

\label{eq_risk_ql_crps_convex}

\end{align}

Pointwise can outperform constant procedures

QL is convex but not exp-concave:

Almost optimal convergence w.r.t. *convex aggregation* \eqref{eq_boa_opt_conv}

For almost optimal congerence w.r.t. *selection* \eqref{eq_boa_opt_select} we need to check **A1** and **A2**:

QL is Lipschitz continuous:

**A1** holds

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

**A1**

For some $G>0$ it holds

for all $x_1,x_2\in \mathbb{R}$ and $t>0$ that

$$ | \ell(x_1, Y_t)-\ell(x_2, Y_t) | \leq G |x_1-x_2|$$

**A2** For some $\alpha>0$, $\beta\in[0,1]$ it holds

for all $x_1,x_2 \in \mathbb{R}$ and $t>0$ that

\begin{align*}

\mathbb{E}[

& \ell(x_1, Y_t)-\ell(x_2, Y_t) | \mathcal{F}_{t-1}] \leq \\

& \mathbb{E}[ \ell'(x_1, Y_t)(x_1 - x_2) |\mathcal{F}_{t-1}] \\

& +

\mathbb{E}\left[ \left. \left( \alpha(\ell'(x_1, Y_t)(x_1 - x_2))^{2}\right)^{1/\beta} \right|\mathcal{F}_{t-1}\right]

\end{align*}

Almost optimal w.r.t. *selection* \eqref{eq_optp_select} @gaillard2018efficient.

:::

::::

## Proposition + Theorem

:::: {.columns}

::: {.column width="48%"}

Conditional quantile risk: $\mathcal{Q}_p(x) = \mathbb{E}[ \text{QL}_p(x, Y_t) | \mathcal{F}_{t-1}]$.

convexity properties of $\mathcal{Q}_p$ depend on the

conditional distribution $Y_t|\mathcal{F}_{t-1}$.

**Proposition 1**

Let $Y$ be a univariate random variable with (Radon-Nikodym) $\nu$-density $f$, then for the second subderivative of the quantile risk

$\mathcal{Q}_p(x) = \mathbb{E}[ \text{QL}_p(x, Y) ]$

of $Y$ it holds for all $p\in(0,1)$ that

$\mathcal{Q}_p'' = f.$

Additionally, if $f$ is a continuous Lebesgue-density with $f\geq\gamma>0$ for some constant $\gamma>0$ on its support $\text{spt}(f)$ then

is $\mathcal{Q}_p$ is $\gamma$-strongly convex.

Strong convexity with $\beta=1$ implies weak exp-concavity **A2** @gaillard2018efficient

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

**A1** and **A2** give us almost optimal convergence w.r.t. selection \eqref{eq_boa_opt_select}

**Theorem 1**

The gradient based fully adaptive Bernstein online aggregation (BOAG) applied pointwise for all $p\in(0,1)$ on $\text{QL}$ satisfies

\eqref{eq_boa_opt_conv} with minimal CRPS given by

$$\widehat{\mathcal{R}}_{t,\pi} = 2\overline{\widehat{\mathcal{R}}}^{\text{QL}}_{t,\pi}.$$

If $Y_t|\mathcal{F}_{t-1}$ is bounded

and has a pdf $f_t$ satifying $f_t>\gamma >0$ on its

support $\text{spt}(f_t)$ then \ref{eq_boa_opt_select} holds with $\beta=1$ and

$$\widehat{\mathcal{R}}_{t,\min} = 2\overline{\widehat{\mathcal{R}}}^{\text{QL}}_{t,\min}$$.

:::

::::

::::

:::: {.notes}

We apply Bernstein Online Aggregation (BOA). It lets us weaken the exp-concavity condition while almost keeping the optimalities \ref{eq_optp_select} and \ref{eq_optp_conv}.

::::

## A Probabilistic Example

:::: {.columns}

::: {.column width="48%"}

Simple Example:

\begin{align}

Y_t & \sim \mathcal{N}(0,\,1) \\

\widehat{X}_{t,1} & \sim \widehat{F}_{1} = \mathcal{N}(-1,\,1) \\

\widehat{X}_{t,2} & \sim \widehat{F}_{2} = \mathcal{N}(3,\,4)

\label{eq:dgp_sim1}

\end{align}

- True weights vary over $p$

- Figures show the ECDF and calculated weights using $T=25$ realizations

- Pointwise solution creates rough estimates

- Pointwise is better than constant

- Smooth solution is better than pointwise

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

::: {.panel-tabset}

## CDFs

```{r, echo = FALSE, fig.width=7, fig.height=6, fig.align='center', cache = TRUE}

source("assets/01_common.R")

load("assets/crps_learning/01_motivation_01.RData")

ggplot(df, aes(x = x, y = y, xend = xend, yend = yend)) +

stat_function(

fun = pnorm, n = 10000,

args = list(mean = dev[2], sd = experts_sd[2]),

aes(col = "Expert 2"), size = 1.5

) +

stat_function(

fun = pnorm, n = 10000,

args = list(mean = dev[1], sd = experts_sd[1]),

aes(col = "Expert 1"), size = 1.5

) +

stat_function(

fun = pnorm,

n = 10000,

size = 1.5, aes(col = "DGP") # , linetype = "dashed"

) +

geom_point(aes(col = "ECDF"), size = 1.5, show.legend = FALSE) +

geom_segment(aes(col = "ECDF")) +

geom_segment(data = tibble(

x_ = -5,

xend_ = min(y),

y_ = 0,

yend_ = 0

), aes(x = x_, xend = xend_, y = y_, yend = yend_)) +

theme_minimal() +

theme(

text = element_text(size = text_size),

legend.position = "bottom",

legend.key.width = unit(1.5, "cm")

) +

ylab("Probability p") +

xlab("Value") +

scale_colour_manual(NULL, values = c("#969696", "#252525", col_auto, col_blue)) +

guides(color = guide_legend(

nrow = 2,

byrow = FALSE # ,

# override.aes = list(

# size = c(1.5, 1.5, 1.5, 1.5)

# )

)) +

scale_x_continuous(limits = c(-5, 7.5))

```

## Weights

```{r, echo = FALSE, fig.width=7, fig.height=6, fig.align='center', cache = TRUE}

source("assets/01_common.R")

load("assets/crps_learning/01_motivation_02.RData")

ggplot() +

geom_line(data = weights[weights$var != "1Optimum", ], size = 1.5, aes(x = prob, y = val, col = var)) +

geom_line(

data = weights[weights$var == "1Optimum", ], size = 1.5, aes(x = prob, y = val, col = var) # , linetype = "dashed"

) +

theme_minimal() +

theme(

text = element_text(size = text_size),

legend.position = "bottom",

legend.key.width = unit(1.5, "cm")

) +

xlab("Probability p") +

ylab("Weight w") +

scale_colour_manual(

NULL,

values = c("#969696", col_pointwise, col_p_constant, col_p_smooth),

labels = modnames[-c(3, 5)]

) +

guides(color = guide_legend(

ncol = 3,

byrow = FALSE,

title.hjust = 5,

# override.aes = list(

# linetype = c(rep("solid", 5), "dashed")

# )

)) +

ylim(c(0, 1))

```

::::

:::

:::

## The Smoothing Procedures

::: {.panel-tabset}

## Penalized Smoothing

:::: {.columns}

::: {.column width="48%"}

Penalized cubic B-Splines for smoothing weights:

Let $\varphi=(\varphi_1,\ldots, \varphi_L)$ be bounded basis functions on $(0,1)$ Then we approximate $w_{t,k}$ by

\begin{align}

w_{t,k}^{\text{smooth}} = \sum_{l=1}^L \beta_l \varphi_l = \beta'\varphi

\end{align}

with parameter vector $\beta$. The latter is estimated to penalize $L_2$-smoothing which minimizes

\begin{equation}

\| w_{t,k} - \beta' \varphi \|^2_2 + \lambda \| \mathcal{D}^{d} (\beta' \varphi) \|^2_2

\label{eq_function_smooth}

\end{equation}

with differential operator $\mathcal{D}$

Computation is easy, since we have an analytical solution

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

We receive the constant solution for high values of $\lambda$ when setting $d=1$

:::

::::

## Basis Smoothing

:::: {.columns}

::: {.column width="48%"}

Represent weights as linear combinations of bounded basis functions:

\begin{equation}

w_{t,k} = \sum_{l=1}^L \beta_{t,k,l} \varphi_l = \boldsymbol \beta_{t,k}' \boldsymbol \varphi

\end{equation}

A popular choice are are B-Splines as local basis functions

$\boldsymbol \beta_{t,k}$ is calculated using a reduced regret matrix:

\begin{equation}

\underbrace{\boldsymbol r_{t}}_{\text{LxK}} = \frac{L}{P} \underbrace{\boldsymbol B'}_{\text{LxP}} \underbrace{\left({\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widetilde{\boldsymbol X}_{t},Y_t)- {\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widehat{\boldsymbol X}_{t},Y_t)\right)}_{\text{PxK}}

\end{equation}

$\boldsymbol r_{t}$ is transformed from PxK to LxK

If $L = P$ it holds that $\boldsymbol \varphi = \boldsymbol{I}$

For $L = 1$ we receive constant weights

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

Weights converge to the constant solution if $L\rightarrow 1$

:::

::::

## Basis Smoothing

:::: {.columns}

::: {.column width="48%"}

Represent weights as linear combinations of bounded basis functions:

\begin{equation}

w_{t,k} = \sum_{l=1}^L \beta_{t,k,l} \varphi_l = \boldsymbol \beta_{t,k}' \boldsymbol \varphi

\end{equation}

A popular choice are are B-Splines as local basis functions

$\boldsymbol \beta_{t,k}$ is calculated using a reduced regret matrix:

\begin{equation}

\underbrace{\boldsymbol r_{t}}_{\text{LxK}} = \frac{L}{P} \underbrace{\boldsymbol B'}_{\text{LxP}} \underbrace{\left({\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widetilde{\boldsymbol X}_{t},Y_t)- {\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widehat{\boldsymbol X}_{t},Y_t)\right)}_{\text{PxK}}

\end{equation}

$\boldsymbol r_{t}$ is transformed from PxK to LxK

If $L = P$ it holds that $\boldsymbol \varphi = \boldsymbol{I}$

For $L = 1$ we receive constant weights

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

Weights converge to the constant solution if $L\rightarrow 1$

:::

::::

::::

---

## The Proposed CRPS-Learning Algorithm

:::

::::

::::

---

## The Proposed CRPS-Learning Algorithm

::: {style="font-size: 85%;"}

:::: {.columns}

::: {.column width="43%"}

### Initialization:

Array of expert predicitons: $\widehat{X}_{t,p,k}$

Vector of Prediction targets: $Y_t$

Starting Weights: $\boldsymbol w_0=(w_{0,1},\ldots, w_{0,K})$

Penalization parameter: $\lambda\geq 0$

B-spline and penalty matrices $\boldsymbol B$ and $\boldsymbol D$ on $\mathcal{P}= (p_1,\ldots,p_M)$

Hat matrix: $$\boldsymbol{\mathcal{H}} = \boldsymbol B(\boldsymbol B'\boldsymbol B+ \lambda (\alpha \boldsymbol D_1'\boldsymbol D_1 + (1-\alpha) \boldsymbol D_2'\boldsymbol D_2))^{-1} \boldsymbol B'$$

Cumulative Regret: $R_{0,k} = 0$

Range parameter: $E_{0,k}=0$

Starting pseudo-weights: $\boldsymbol \beta_0 = \boldsymbol B^{\text{pinv}}\boldsymbol w_0(\boldsymbol{\mathcal{P}})$

:::

::: {.column width="2%"}

:::

::: {.column width="55%"}

### Core:

for( t in 1:T ) {

$\widetilde{\boldsymbol X}_{t} = \text{Sort}\left( \boldsymbol w_{t-1}'(\boldsymbol P) \widehat{\boldsymbol X}_{t} \right)$ # Prediction

$\boldsymbol r_{t} = \frac{L}{M} \boldsymbol B' \left({\boldsymbol{QL}}_{\boldsymbol{\mathcal P}}^{\nabla}(\widetilde{\boldsymbol X}_{t},Y_t)- {\boldsymbol{QL}}_{\boldsymbol{\mathcal P}}^{\nabla}(\widehat{\boldsymbol X}_{t},Y_t)\right)$

$\boldsymbol E_{t} = \max(\boldsymbol E_{t-1}, \boldsymbol r_{t}^+ + \boldsymbol r_{t}^-)$

$\boldsymbol V_{t} = \boldsymbol V_{t-1} + \boldsymbol r_{t}^{ \odot 2}$

$\boldsymbol \eta_{t} =\min\left( \left(-\log(\boldsymbol \beta_{0}) \odot \boldsymbol V_{t}^{\odot -1} \right)^{\odot\frac{1}{2}} , \frac{1}{2}\boldsymbol E_{t}^{\odot-1}\right)$

$\boldsymbol R_{t} = \boldsymbol R_{t-1}+ \boldsymbol r_{t} \odot \left( \boldsymbol 1 - \boldsymbol \eta_{t} \odot \boldsymbol r_{t} \right)/2 + \boldsymbol E_{t} \odot \mathbb{1}\{-2\boldsymbol \eta_{t}\odot \boldsymbol r_{t} > 1\}$

$\boldsymbol \beta_{t} = K \boldsymbol \beta_{0} \odot \boldsymbol {SoftMax}\left( - \boldsymbol \eta_{t} \odot \boldsymbol R_{t} + \log( \boldsymbol \eta_{t}) \right)$

$\boldsymbol w_{t}(\boldsymbol P) = \underbrace{\boldsymbol B(\boldsymbol B'\boldsymbol B+ \lambda (\alpha \boldsymbol D_1'\boldsymbol D_1 + (1-\alpha) \boldsymbol D_2'\boldsymbol D_2))^{-1} \boldsymbol B'}_{\boldsymbol{\mathcal{H}}} \boldsymbol B \boldsymbol \beta_{t}$

}

:::

::::

:::

## Simulation Study

::: {.panel-tabset}

## BOA

:::: {.columns}

::: {.column width="48%"}

Data Generating Process of the [simple probabilistic example](#simple_example):

\begin{align*}

Y_t &\sim \mathcal{N}(0,\,1)\\

\widehat{X}_{t,1} &\sim \widehat{F}_{1}=\mathcal{N}(-1,\,1) \\

\widehat{X}_{t,2} &\sim \widehat{F}_{2}=\mathcal{N}(3,\,4)

\end{align*}

- Constant solution $\lambda \rightarrow \infty$

- Pointwise Solution of the proposed BOAG

- Smoothed Solution of the proposed BOAG

- Weights are smoothed during learning

- Smooth weights are used to calculate Regret, adjust weights, etc.

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

::: {.panel-tabset}

## QL Deviation

Deviation from best attainable QL (1000 runs).

## CRPS vs. Lambda

CRPS Values for different $\lambda$ (1000 runs)

## Knots

CRPS for different number of knots (1000 runs)

::::

:::

::::

## Comparison to EWA and ML-Poly

The same simulation carried out for different algorithms (1000 runs):

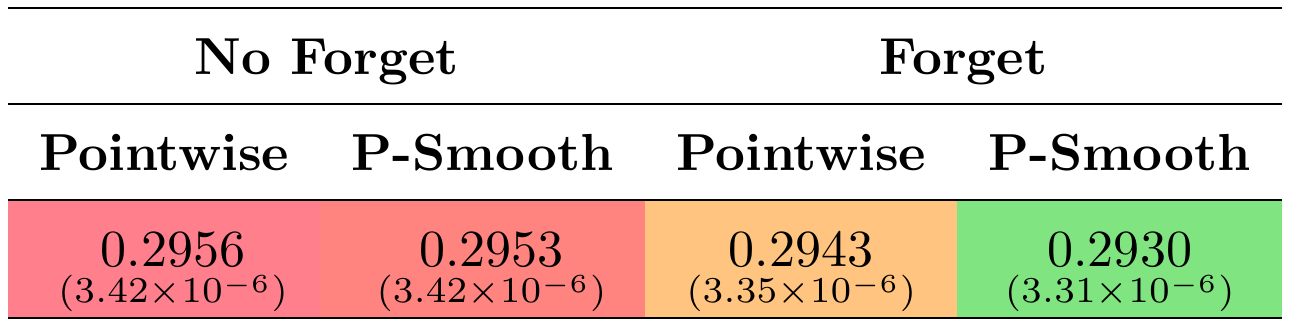

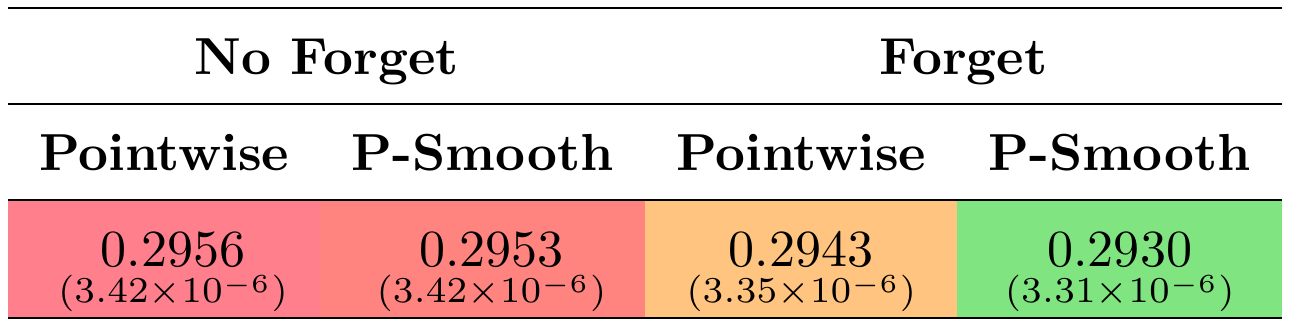

## Study Forget

:::: {.columns}

::: {.column width="38%"}

#### New DGP:

\begin{align*}

Y_t &\sim \mathcal{N}\left(\frac{\sin(0.005 \pi t )}{2},\,1\right) \\

\widehat{X}_{t,1} &\sim \widehat{F}_{1} = \mathcal{N}(-1,\,1) \\

\widehat{X}_{t,2} &\sim \widehat{F}_{2} = \mathcal{N}(3,\,4)

\end{align*}

Changing optimal weights

Single run example depicted aside

No forgetting leads to long-term constant weights

## Study Forget

:::: {.columns}

::: {.column width="38%"}

#### New DGP:

\begin{align*}

Y_t &\sim \mathcal{N}\left(\frac{\sin(0.005 \pi t )}{2},\,1\right) \\

\widehat{X}_{t,1} &\sim \widehat{F}_{1} = \mathcal{N}(-1,\,1) \\

\widehat{X}_{t,2} &\sim \widehat{F}_{2} = \mathcal{N}(3,\,4)

\end{align*}

Changing optimal weights

Single run example depicted aside

No forgetting leads to long-term constant weights

:::

::: {.column width="4%"}

:::

::: {.column width="58%"}

###

```{r, echo = FALSE, fig.width=7, fig.height=5, fig.align='center', cache = TRUE}

load("assets/crps_learning/weights_preprocessed.rda")

mod_labs <- c("Optimum", "No Forget\nPointwise", "No Forget\nP-Smooth", "Forget\nPointwise", "Forget\nP-Smooth")

names(mod_labs) <- c("Optimum", "nf_ptw", "nf_psmth", "f_ptw", "f_psmth")

colseq <- c(grey(.99), "orange", "red", "purple", "blue", "darkblue", "black")

weights_preprocessed %>%

mutate(w = 1 - w) %>%

ggplot(aes(t, p, fill = w)) +

geom_raster(interpolate = TRUE) +

facet_grid(Mod ~ ., labeller = labeller(Mod = mod_labs)) +

theme_minimal() +

theme(

# plot.margin = unit(c(0.5, 0.5, 0.5, 0.5), "cm"),

text = element_text(size = 15),

legend.key.height = unit(1, "inch")

) +

scale_x_continuous(expand = c(0, 0)) +

xlab("Time t") +

scale_fill_gradientn(

limits = c(0, 1),

colours = colseq,

breaks = seq(0, 1, 0.2)

) +

ylab("Weight w")

```

:::

::::

::::

## Application Study

::: {.panel-tabset}

## Overview

:::: {.columns}

::: {.column width="29%"}

::: {style="font-size: 85%;"}

Data:

- Forecasting European emission allowances (EUA)

- Daily month-ahead prices

- Jan 13 - Dec 20 (Phase III, 2092 Obs)

- Rolling Window (length 250 ~ 1 year)

Combination methods:

- Online

- Naive, BOAG, EWAG, ML-PolyG, BMA

- Batch

- QRlin, QRconv

::::

:::

::: {.column width="2%"}

:::

::: {.column width="69%"}

Tuning paramter grids:

- Smoothing Penalty: $\Lambda= \{0\}\cup \{2^x|x\in \{-4,-3.5,\ldots,12\}\}$

- Learning Rates: $\mathcal{E}= \{2^x|x\in \{-1,-0.5,\ldots,9\}\}$

```{r, echo = FALSE, fig.width=10, fig.height=5, fig.align='center', cache = TRUE}

load("assets/crps_learning/overview_data.rds")

data %>%

ggplot(aes(x = Date, y = value)) +

geom_line(size = 1, col = cols[9,"blue"]) +

theme_minimal() +

ylab("Value") +

facet_wrap(. ~ name, scales = "free", ncol = 1) +

theme(

text = element_text(size = 15),

strip.background = element_blank(),

strip.text.x = element_blank()

) -> p1

data %>%

ggplot(aes(x = value)) +

geom_histogram(aes(y = ..density..), size = 1, fill = cols[9,"blue"], bins = 50) +

ylab("Density") +

xlab("Value") +

theme_minimal() +

theme(

strip.background = element_rect(fill = cols[3,"grey"], colour = cols[3,"grey"]),

text = element_text(size = text_size)

) +

facet_wrap(. ~ name, scales = "free", ncol = 1, strip.position = "right") -> p2

overview <- cowplot::plot_grid(plotlist = list(p1, p2), align = "hv", axis = "tblr", rel_widths = c(0.65, 0.35))

overview

```

:::

::::

## Experts

::: {style="font-size: 90%;"}

Simple exponential smoothing with additive errors (**ETS-ANN**):

\begin{align*}

Y_{t} = l_{t-1} + \varepsilon_t \quad \text{with} \quad l_t = l_{t-1} + \alpha \varepsilon_t \quad \text{and} \quad \varepsilon_t \sim \mathcal{N}(0,\sigma^2)

\end{align*}

Quantile regression (**QuantReg**): For each $p \in \mathcal{P}$ we assume:

\begin{align*}

F^{-1}_{Y_t}(p) = \beta_{p,0} + \beta_{p,1} Y_{t-1} + \beta_{p,2} |Y_{t-1}-Y_{t-2}|

\end{align*}

ARIMA(1,0,1)-GARCH(1,1) with Gaussian errors (**ARMA-GARCH**):

\begin{align*}

Y_{t} = \mu + \phi(Y_{t-1}-\mu) + \theta \varepsilon_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2 \quad \text{and} \quad Z_t \sim \mathcal{N}(0,1)

\end{align*}

ARIMA(0,1,0)-I-EGARCH(1,1) with Gaussian errors (**I-EGARCH**):

\begin{align*}

Y_{t} = \mu + Y_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \log(\sigma_t^2) = \omega + \alpha Z_{t-1}+ \gamma (|Z_{t-1}|-\mathbb{E}|Z_{t-1}|) + \beta \log(\sigma_{t-1}^2) \quad \text{and} \quad Z_t \sim \mathcal{N}(0,1)

\end{align*}

ARIMA(0,1,0)-GARCH(1,1) with student-t errors (**I-GARCHt**):

\begin{align*}

Y_{t} = \mu + Y_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2 \quad \text{and} \quad Z_t \sim t(0,1, \nu)

\end{align*}

::::

## Results

::: {.panel-tabset}

## Significance

```{r, echo = FALSE, fig.width=7, fig.height=5.5, fig.align='center', cache = TRUE, results='asis'}

load("assets/crps_learning/bernstein_application_study_estimations+learnings_rev1.RData")

quantile_loss <- function(X, y, tau) {

t(t(y - X) * tau) * (y - X > 0) + t(t(X - y) * (1 - tau)) * (y - X < 0)

}

QL <- FCSTN * NA

for (k in 1:dim(QL)[1]) {

QL[k, , ] <- quantile_loss(FCSTN[k, , ], as.numeric(yoos), Qgrid)

}

## TABLE AREA

KK <- length(mnames)

TTinit <- 1 ## without first, as all comb. are uniform

RQL <- apply(QL[1:KK, -c(1:TTinit), ], c(1, 3), mean)

dimnames(RQL) <- list(mnames, Qgrid)

RQLm <- apply(RQL, c(1), mean, na.rm = TRUE)

##

qq <- apply(QL[1:KK, -c(1:TTinit), ], c(1, 2), mean)

library(xtable)

Pall <- numeric(KK)

for (i in 1:KK) Pall[i] <- t.test(qq[K + 1, ] - qq[i, ], alternative = "greater")$p.val

Mall <- (RQLm - RQLm[K + 1]) * 10000

Mout <- matrix(Mall[-c(1:(K + 3))], 5, 6)

dimnames(Mout) <- list(moname, mtname)

Pallout <- format(round(Pall, 3), nsmall = 3)

Pallout[Pallout == "0.000"] <- "<.001"

Pallout[Pallout == "1.000"] <- ">.999"

MO <- K

IDX <- c(1:K)

OUT <- t(Mall[IDX])

OUT.num <- OUT

class(OUT.num) <- "numeric"

xxx <- OUT.num

xxxx <- OUT

table <- round(OUT, 3)

table_col <- OUT

i.p <- 1

for (i.p in 1:MO) {

xmax <- -min(Mall) * 5 # max(Mall)

xmin <- min(Mall)

cred <- rev(c(1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, .8, .5)) # , .5,0,0,0,1,1,1) ## red

cgreen <- rev(c(.5, .5, .55, .6, .65, .7, .75, .8, .85, .9, .95, 1, 1, .9)) # , .5,0,1,1,1,0,0) ## green

cblue <- rev(c(.55, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5)) # , .5,1,1,0,0,0,1) ## blue

crange <- c(xmin, xmax) ## range

## colors in plot:

fred <- round(approxfun(seq(crange[1], crange[2], length = length(cred)), cred)(pmin(xxx[, i.p], xmax)), 3)

fgreen <- round(approxfun(seq(crange[1], crange[2], length = length(cgreen)), cgreen)(pmin(xxx[, i.p], xmax)), 3)

fblue <- round(approxfun(seq(crange[1], crange[2], length = length(cblue)), cblue)(pmin(xxx[, i.p], xmax)), 3)

tmp <- format(round(xxx[, i.p], 3), nsmall = 3)

xxxx[, i.p] <- paste("\\cellcolor[rgb]{", fred, ",", fgreen, ",", fblue, "}", tmp, " {\\footnotesize (", Pallout[IDX[i.p]], ")}", sep = "")

table_col[, i.p] <- rgb(fred, fgreen, fblue, maxColorValue = 1)

table[, i.p] <- paste0(

table[, i.p],

'(', Pallout[i.p], ")"

)

}

table_out <- kbl(

table,

align = rep("c", ncol(table)),

bootstrap_options = c("condensed"),

escape = FALSE) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:nrow(table), color = cols[9, "grey"])

for (j in 1:ncol(table)) {

table_out <- table_out %>%

column_spec(j, background = table_col[, j])

}

table_out

```

```{r, echo = FALSE, fig.width=7, fig.height=5.5, fig.align='center', cache = TRUE, results='asis'}

MO <- 6

OUT <- Mout

OUT.num <- OUT

class(OUT.num) <- "numeric"

xxx <- OUT.num

xxxx <- OUT

i.p <- 1

table2 <- OUT

table_col2 <- OUT

for (i.p in 1:MO) {

xmax <- -min(Mall) * 5 # max(Mall)

xmin <- min(Mall)

cred <- rev(c(1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, .8, .5)) # , .5,0,0,0,1,1,1) ## red

cgreen <- rev(c(.5, .5, .55, .6, .65, .7, .75, .8, .85, .9, .95, 1, 1, .9)) # , .5,0,1,1,1,0,0) ## green

cblue <- rev(c(.55, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5)) # , .5,1,1,0,0,0,1) ## blue

crange <- c(xmin, xmax) ## range

## colors in plot:

fred <- round(approxfun(seq(crange[1], crange[2], length = length(cred)), cred)(pmin(xxx[, i.p], xmax)), 3)

fgreen <- round(approxfun(seq(crange[1], crange[2], length = length(cgreen)), cgreen)(pmin(xxx[, i.p], xmax)), 3)

fblue <- round(approxfun(seq(crange[1], crange[2], length = length(cblue)), cblue)(pmin(xxx[, i.p], xmax)), 3)

tmp <- format(round(xxx[, i.p], 3), nsmall = 3)

xxxx[, i.p] <- paste("\\cellcolor[rgb]{", fred, ",", fgreen, ",", fblue, "}", tmp, " {\\footnotesize (", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")}", sep = "")

# table2[, i.p] <- paste0(tmp, " (", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")")

table2[, i.p] <- paste0(

tmp,

"(", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")"

)

table_col2[, i.p] <- rgb(fred, fgreen, fblue, maxColorValue = 1)

} # i.p

rownames(table2) <- c("Pointwise", "B-Smooth", "P-Smooth", "B-Constant", "P-Constant")

rownames(table_col2) <- rownames(table2)

table2["B-Smooth", c("QRlin", "QRconv")] <- "-"

table_col2["B-Smooth", c("QRlin", "QRconv")] <- cols[2, "grey"]

idx <- c("Pointwise", "B-Constant", "P-Constant", "B-Smooth", "P-Smooth")

table2["P-Smooth", "BOAG"] <- "-0.182(0.039)"

table_out2 <- kableExtra::kbl(

table2[idx, ],

align = rep("c", ncol(table2)),

bootstrap_options = c("condensed"),

escape = FALSE

) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:nrow(table2[idx, ]), color = cols[9, "grey"])

for (j in seq_len(ncol(table2[idx, ]))) {

table_out2 <- table_out2 %>%

column_spec(1 + j,

background = table_col2[idx, j]

)

}

table_out2 %>%

column_spec(1, bold = T)

```

:::

::: {.column width="4%"}

:::

::: {.column width="58%"}

###

```{r, echo = FALSE, fig.width=7, fig.height=5, fig.align='center', cache = TRUE}

load("assets/crps_learning/weights_preprocessed.rda")

mod_labs <- c("Optimum", "No Forget\nPointwise", "No Forget\nP-Smooth", "Forget\nPointwise", "Forget\nP-Smooth")

names(mod_labs) <- c("Optimum", "nf_ptw", "nf_psmth", "f_ptw", "f_psmth")

colseq <- c(grey(.99), "orange", "red", "purple", "blue", "darkblue", "black")

weights_preprocessed %>%

mutate(w = 1 - w) %>%

ggplot(aes(t, p, fill = w)) +

geom_raster(interpolate = TRUE) +

facet_grid(Mod ~ ., labeller = labeller(Mod = mod_labs)) +

theme_minimal() +

theme(

# plot.margin = unit(c(0.5, 0.5, 0.5, 0.5), "cm"),

text = element_text(size = 15),

legend.key.height = unit(1, "inch")

) +

scale_x_continuous(expand = c(0, 0)) +

xlab("Time t") +

scale_fill_gradientn(

limits = c(0, 1),

colours = colseq,

breaks = seq(0, 1, 0.2)

) +

ylab("Weight w")

```

:::

::::

::::

## Application Study

::: {.panel-tabset}

## Overview

:::: {.columns}

::: {.column width="29%"}

::: {style="font-size: 85%;"}

Data:

- Forecasting European emission allowances (EUA)

- Daily month-ahead prices

- Jan 13 - Dec 20 (Phase III, 2092 Obs)

- Rolling Window (length 250 ~ 1 year)

Combination methods:

- Online

- Naive, BOAG, EWAG, ML-PolyG, BMA

- Batch

- QRlin, QRconv

::::

:::

::: {.column width="2%"}

:::

::: {.column width="69%"}

Tuning paramter grids:

- Smoothing Penalty: $\Lambda= \{0\}\cup \{2^x|x\in \{-4,-3.5,\ldots,12\}\}$

- Learning Rates: $\mathcal{E}= \{2^x|x\in \{-1,-0.5,\ldots,9\}\}$

```{r, echo = FALSE, fig.width=10, fig.height=5, fig.align='center', cache = TRUE}

load("assets/crps_learning/overview_data.rds")

data %>%

ggplot(aes(x = Date, y = value)) +

geom_line(size = 1, col = cols[9,"blue"]) +

theme_minimal() +

ylab("Value") +

facet_wrap(. ~ name, scales = "free", ncol = 1) +

theme(

text = element_text(size = 15),

strip.background = element_blank(),

strip.text.x = element_blank()

) -> p1

data %>%

ggplot(aes(x = value)) +

geom_histogram(aes(y = ..density..), size = 1, fill = cols[9,"blue"], bins = 50) +

ylab("Density") +

xlab("Value") +

theme_minimal() +

theme(

strip.background = element_rect(fill = cols[3,"grey"], colour = cols[3,"grey"]),

text = element_text(size = text_size)

) +

facet_wrap(. ~ name, scales = "free", ncol = 1, strip.position = "right") -> p2

overview <- cowplot::plot_grid(plotlist = list(p1, p2), align = "hv", axis = "tblr", rel_widths = c(0.65, 0.35))

overview

```

:::

::::

## Experts

::: {style="font-size: 90%;"}

Simple exponential smoothing with additive errors (**ETS-ANN**):

\begin{align*}

Y_{t} = l_{t-1} + \varepsilon_t \quad \text{with} \quad l_t = l_{t-1} + \alpha \varepsilon_t \quad \text{and} \quad \varepsilon_t \sim \mathcal{N}(0,\sigma^2)

\end{align*}

Quantile regression (**QuantReg**): For each $p \in \mathcal{P}$ we assume:

\begin{align*}

F^{-1}_{Y_t}(p) = \beta_{p,0} + \beta_{p,1} Y_{t-1} + \beta_{p,2} |Y_{t-1}-Y_{t-2}|

\end{align*}

ARIMA(1,0,1)-GARCH(1,1) with Gaussian errors (**ARMA-GARCH**):

\begin{align*}

Y_{t} = \mu + \phi(Y_{t-1}-\mu) + \theta \varepsilon_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2 \quad \text{and} \quad Z_t \sim \mathcal{N}(0,1)

\end{align*}

ARIMA(0,1,0)-I-EGARCH(1,1) with Gaussian errors (**I-EGARCH**):

\begin{align*}

Y_{t} = \mu + Y_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \log(\sigma_t^2) = \omega + \alpha Z_{t-1}+ \gamma (|Z_{t-1}|-\mathbb{E}|Z_{t-1}|) + \beta \log(\sigma_{t-1}^2) \quad \text{and} \quad Z_t \sim \mathcal{N}(0,1)

\end{align*}

ARIMA(0,1,0)-GARCH(1,1) with student-t errors (**I-GARCHt**):

\begin{align*}

Y_{t} = \mu + Y_{t-1} + \varepsilon_t \quad \text{with} \quad \varepsilon_t = \sigma_t Z, \quad \sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2 \quad \text{and} \quad Z_t \sim t(0,1, \nu)

\end{align*}

::::

## Results

::: {.panel-tabset}

## Significance

```{r, echo = FALSE, fig.width=7, fig.height=5.5, fig.align='center', cache = TRUE, results='asis'}

load("assets/crps_learning/bernstein_application_study_estimations+learnings_rev1.RData")

quantile_loss <- function(X, y, tau) {

t(t(y - X) * tau) * (y - X > 0) + t(t(X - y) * (1 - tau)) * (y - X < 0)

}

QL <- FCSTN * NA

for (k in 1:dim(QL)[1]) {

QL[k, , ] <- quantile_loss(FCSTN[k, , ], as.numeric(yoos), Qgrid)

}

## TABLE AREA

KK <- length(mnames)

TTinit <- 1 ## without first, as all comb. are uniform

RQL <- apply(QL[1:KK, -c(1:TTinit), ], c(1, 3), mean)

dimnames(RQL) <- list(mnames, Qgrid)

RQLm <- apply(RQL, c(1), mean, na.rm = TRUE)

##

qq <- apply(QL[1:KK, -c(1:TTinit), ], c(1, 2), mean)

library(xtable)

Pall <- numeric(KK)

for (i in 1:KK) Pall[i] <- t.test(qq[K + 1, ] - qq[i, ], alternative = "greater")$p.val

Mall <- (RQLm - RQLm[K + 1]) * 10000

Mout <- matrix(Mall[-c(1:(K + 3))], 5, 6)

dimnames(Mout) <- list(moname, mtname)

Pallout <- format(round(Pall, 3), nsmall = 3)

Pallout[Pallout == "0.000"] <- "<.001"

Pallout[Pallout == "1.000"] <- ">.999"

MO <- K

IDX <- c(1:K)

OUT <- t(Mall[IDX])

OUT.num <- OUT

class(OUT.num) <- "numeric"

xxx <- OUT.num

xxxx <- OUT

table <- round(OUT, 3)

table_col <- OUT

i.p <- 1

for (i.p in 1:MO) {

xmax <- -min(Mall) * 5 # max(Mall)

xmin <- min(Mall)

cred <- rev(c(1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, .8, .5)) # , .5,0,0,0,1,1,1) ## red

cgreen <- rev(c(.5, .5, .55, .6, .65, .7, .75, .8, .85, .9, .95, 1, 1, .9)) # , .5,0,1,1,1,0,0) ## green

cblue <- rev(c(.55, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5)) # , .5,1,1,0,0,0,1) ## blue

crange <- c(xmin, xmax) ## range

## colors in plot:

fred <- round(approxfun(seq(crange[1], crange[2], length = length(cred)), cred)(pmin(xxx[, i.p], xmax)), 3)

fgreen <- round(approxfun(seq(crange[1], crange[2], length = length(cgreen)), cgreen)(pmin(xxx[, i.p], xmax)), 3)

fblue <- round(approxfun(seq(crange[1], crange[2], length = length(cblue)), cblue)(pmin(xxx[, i.p], xmax)), 3)

tmp <- format(round(xxx[, i.p], 3), nsmall = 3)

xxxx[, i.p] <- paste("\\cellcolor[rgb]{", fred, ",", fgreen, ",", fblue, "}", tmp, " {\\footnotesize (", Pallout[IDX[i.p]], ")}", sep = "")

table_col[, i.p] <- rgb(fred, fgreen, fblue, maxColorValue = 1)

table[, i.p] <- paste0(

table[, i.p],

'(', Pallout[i.p], ")"

)

}

table_out <- kbl(

table,

align = rep("c", ncol(table)),

bootstrap_options = c("condensed"),

escape = FALSE) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:nrow(table), color = cols[9, "grey"])

for (j in 1:ncol(table)) {

table_out <- table_out %>%

column_spec(j, background = table_col[, j])

}

table_out

```

```{r, echo = FALSE, fig.width=7, fig.height=5.5, fig.align='center', cache = TRUE, results='asis'}

MO <- 6

OUT <- Mout

OUT.num <- OUT

class(OUT.num) <- "numeric"

xxx <- OUT.num

xxxx <- OUT

i.p <- 1

table2 <- OUT

table_col2 <- OUT

for (i.p in 1:MO) {

xmax <- -min(Mall) * 5 # max(Mall)

xmin <- min(Mall)

cred <- rev(c(1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, .8, .5)) # , .5,0,0,0,1,1,1) ## red

cgreen <- rev(c(.5, .5, .55, .6, .65, .7, .75, .8, .85, .9, .95, 1, 1, .9)) # , .5,0,1,1,1,0,0) ## green

cblue <- rev(c(.55, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5, .5)) # , .5,1,1,0,0,0,1) ## blue

crange <- c(xmin, xmax) ## range

## colors in plot:

fred <- round(approxfun(seq(crange[1], crange[2], length = length(cred)), cred)(pmin(xxx[, i.p], xmax)), 3)

fgreen <- round(approxfun(seq(crange[1], crange[2], length = length(cgreen)), cgreen)(pmin(xxx[, i.p], xmax)), 3)

fblue <- round(approxfun(seq(crange[1], crange[2], length = length(cblue)), cblue)(pmin(xxx[, i.p], xmax)), 3)

tmp <- format(round(xxx[, i.p], 3), nsmall = 3)

xxxx[, i.p] <- paste("\\cellcolor[rgb]{", fred, ",", fgreen, ",", fblue, "}", tmp, " {\\footnotesize (", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")}", sep = "")

# table2[, i.p] <- paste0(tmp, " (", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")")

table2[, i.p] <- paste0(

tmp,

"(", Pallout[K + 3 + 5 * (i.p - 1) + 1:5], ")"

)

table_col2[, i.p] <- rgb(fred, fgreen, fblue, maxColorValue = 1)

} # i.p

rownames(table2) <- c("Pointwise", "B-Smooth", "P-Smooth", "B-Constant", "P-Constant")

rownames(table_col2) <- rownames(table2)

table2["B-Smooth", c("QRlin", "QRconv")] <- "-"

table_col2["B-Smooth", c("QRlin", "QRconv")] <- cols[2, "grey"]

idx <- c("Pointwise", "B-Constant", "P-Constant", "B-Smooth", "P-Smooth")

table2["P-Smooth", "BOAG"] <- "-0.182(0.039)"

table_out2 <- kableExtra::kbl(

table2[idx, ],

align = rep("c", ncol(table2)),

bootstrap_options = c("condensed"),

escape = FALSE

) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:nrow(table2[idx, ]), color = cols[9, "grey"])

for (j in seq_len(ncol(table2[idx, ]))) {

table_out2 <- table_out2 %>%

column_spec(1 + j,

background = table_col2[idx, j]

)

}

table_out2 %>%

column_spec(1, bold = T)

```

CRPS difference to Naive. Negative values correspond to better performance (the best value is bold).

Additionally, we show the p-value of the DM-test, testing against Naive. The cells are colored with respect to their values (the greener better).

## QL

```{r, echo = FALSE, fig.width=13, fig.height=5.5, fig.align='center', cache = TRUE}

##### Performance across probabilities

M <- length(mnames)

Msel <- c(1:K, K + 1, K + 1 + 2 + 1:4 * 5 - 2) ## experts + naive + smooth

modnames <- mnames[Msel]

tCOL <- c(

"#E6CC00", "#CC6600", "#E61A1A", "#99004D", "#F233BF",

"#666666", "#0000CC", "#1A80E6", "#1AE680", "#00CC00"

)

t(RQL) %>%

as_tibble() %>%

select(Naive) %>%

mutate(Naive = 0) %>%

mutate(p = 1:99 / 100) %>%

pivot_longer(-p, values_to = "Loss differences") -> dummy

t(RQL) %>%

as_tibble() %>%

select(mnames[Msel]) %>%

mutate(p = 1:99 / 100) %>%

pivot_longer(!p & !Naive) %>%

mutate(`Loss differences` = value - Naive) %>%

select(-value, -Naive) %>%

rbind(dummy) %>%

mutate(

p = as.numeric(p),

name = stringr::str_replace(name, "-P-smooth", ""),

name = factor(name, levels = stringr::str_replace(mnames[Msel], "-P-smooth", ""), ordered = T),

`Loss differences` = `Loss differences` * 1000

) %>%

ggplot(aes(x = p, y = `Loss differences`, colour = name)) +

geom_line(linewidth = 1) +

theme_minimal() +

theme(

text = element_text(size = text_size),

legend.position = "bottom"

) +

xlab("Probability p") +

scale_color_manual(NULL, values = tCOL) +

guides(colour = guide_legend(nrow = 2, byrow = TRUE))

```

## Cumulative Loss Difference

```{r, echo = FALSE, fig.width=13, fig.height=5.5, fig.align='center', cache = TRUE}

DQL <- t(apply(apply(QL[1:KK, -c(1:TTinit), ], c(1, 2), mean), 1, cumsum))

rownames(DQL) <- mnames

t(DQL) %>%

as_tibble() %>%

select(Naive) %>%

mutate(

`Difference of cumulative loss` = 0,

Date = ytime[-c(1:(TT + TTinit + 1))],

name = "Naive"

) %>%

select(-Naive) -> dummy

data <- t(DQL) %>%

as_tibble() %>%

select(mnames[Msel]) %>%

mutate(Date = ytime[-c(1:(TT + TTinit + 1))]) %>%

pivot_longer(!Date & !Naive) %>%

mutate(`Difference of cumulative loss` = value - Naive) %>%

select(-value, -Naive) %>%

rbind(dummy) %>%

mutate(

name = stringr::str_replace(name, "-P-smooth", ""),

name = factor(name, levels = stringr::str_replace(mnames[Msel], "-P-smooth", ""))

)

data %>%

ggplot(aes(x = Date, y = `Difference of cumulative loss`, colour = name)) +

geom_line(size = 1) +

theme_minimal() +

theme(

text = element_text(size = text_size),

legend.position = "bottom"

) +

scale_color_manual(NULL, values = tCOL) +

guides(colour = guide_legend(nrow = 2, byrow = TRUE))

```

## Weights (BOAG P-Smooth)

```{r, echo = FALSE, fig.width=13, fig.height=5.5, fig.align='center', cache = TRUE}

load("assets/crps_learning/weights_data.RData")

weights_data %>%

ggplot(aes(Date, p, fill = w)) +

geom_raster(interpolate = TRUE) +

facet_grid(Mod ~ .) +

theme_minimal() +

theme(

plot.margin = unit(c(0.2, 0.2, 0.2, 0.2), "cm"),

text = element_text(size = text_size),

legend.key.height = unit(0.9, "inch")

) +

ylab("p") +

scale_fill_gradientn(

limits = c(0, 1),

colours = colseq,

breaks = seq(0, 1, 0.2)

) +

scale_x_date(expand = c(0, 0))

```

## Weights (Last)

```{r, echo = FALSE, fig.width=13, fig.height=5.5, fig.align='center', cache = TRUE}

load("assets/crps_learning/weights_example.RData")

weights %>%

ggplot(aes(x = p, y = weights, col = Model)) +

geom_line(size = 1.5) +

theme_minimal() +

theme(

plot.margin = unit(c(0.2, 0.3, 0.2, 0.2), "cm"),

text = element_text(size = text_size),

legend.position = "bottom",

legend.title = element_blank(),

panel.spacing = unit(1.5, "lines")

) +

scale_color_manual(NULL, values = tCOL[1:K]) +

facet_grid(. ~ K)

```

::::

::::

# Multivariate Probabilistic CRPS Learning with an Application to Day-Ahead Electricity Prices {visibility="uncounted"}

Berrisch, J., & Ziel, F. (2024). *International Journal of Forecasting*, 40(4), 1568-1586.

## Multivariate CRPS Learning

:::: {.columns}

::: {.column width="45%"}

We extend the **B-Smooth** and **P-Smooth** procedures to the multivariate setting:

::: {.panel-tabset}

## Penalized Smoothing

Let $\boldsymbol{\psi}^{\text{mv}}=(\psi_1,\ldots, \psi_{D})$ and $\boldsymbol{\psi}^{\text{pr}}=(\psi_1,\ldots, \psi_{P})$ be two sets of bounded basis functions on $(0,1)$:

\begin{equation*}

\boldsymbol w_{t,k} = \boldsymbol{\psi}^{\text{mv}} \boldsymbol{b}_{t,k} {\boldsymbol{\psi}^{pr}}'

\end{equation*}

with parameter matix $\boldsymbol b_{t,k}$. The latter is estimated to penalize $L_2$-smoothing which minimizes

\begin{align}

& \| \boldsymbol{\beta}_{t,d, k}' \boldsymbol{\varphi}^{\text{pr}} - \boldsymbol b_{t, d, k}' \boldsymbol{\psi}^{\text{pr}} \|^2_2 + \lambda^{\text{pr}} \| \mathcal{D}_{q} (\boldsymbol b_{t, d, k}' \boldsymbol{\psi}^{\text{pr}}) \|^2_2 + \nonumber \\

& \| \boldsymbol{\beta}_{t, p, k}' \boldsymbol{\varphi}^{\text{mv}} - \boldsymbol b_{t, p, k}' \boldsymbol{\psi}^{\text{mv}} \|^2_2 + \lambda^{\text{mv}} \| \mathcal{D}_{q} (\boldsymbol b_{t, p, k}' \boldsymbol{\psi}^{\text{mv}}) \|^2_2 \nonumber

\end{align}

with differential operator $\mathcal{D}_q$ of order $q$

[{{< fa calculator >}}]{style="color:var(--col_green_10);"} We have an analytical solution.

## Basis Smoothing

Linear combinations of bounded basis functions:

\begin{equation}

\underbrace{\boldsymbol w_{t,k}}_{D \text{ x } P} = \sum_{j=1}^{\widetilde D} \sum_{l=1}^{\widetilde P} \beta_{t,j,l,k} \varphi^{\text{mv}}_{j} \varphi^{\text{pr}}_{l} = \underbrace{\boldsymbol \varphi^{\text{mv}}}_{D\text{ x }\widetilde D} \boldsymbol \beta_{t,k} \underbrace{{\boldsymbol\varphi^{\text{pr}}}'}_{\widetilde P \text{ x }P} \nonumber

\end{equation}

A popular choice: B-Splines

$\boldsymbol \beta_{t,k}$ is calculated using a reduced regret matrix:

$\underbrace{\boldsymbol r_{t,k}}_{\widetilde P \times \widetilde D} = \boldsymbol \varphi^{\text{pr}} \underbrace{\left({\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widetilde{\boldsymbol X}_{t},Y_t)- {\boldsymbol{QL}}_{\mathcal{P}}^{\nabla}(\widehat{\boldsymbol X}_{t},Y_t)\right)}_{\text{PxD}}\boldsymbol \varphi^{\text{mv}}$

If $\widetilde P = P$ it holds that $\boldsymbol \varphi^{pr} = \boldsymbol{I}$ (pointwise)

For $\widetilde P = 1$ we receive constant weights

::::

:::

::: {.column width="2%"}

:::

::: {.column width="53%"}

```{r, fig.align="center", echo=FALSE, out.width = "1000px", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/algorithm.svg")

```

:::

::::

## Application

:::: {.columns}

::: {.column width="48%"}

#### Data

- Day-Ahead electricity price forecasts from @marcjasz2022distributional

- Produced using probabilistic neural networks

- 24-dimensional distributional forecasts

- Distribution assumptions: JSU and Normal

- 8 experts (4 JSU, 4 Normal)

- 27th Dec. 2018 to 31st Dec. 2020 (736 days)

- We extract 99 quantiles (percentiles)

:::

::: {.column width="4%"}

:::

::: {.column width="48%"}

#### Setup

Evaluation: Exclude first 182 observations

Extensions: Penalized smoothing | Forgetting

Tuning strategies:

- Bayesian Fix

- Sophisticated Baesian Search algorithm

- Online

- Dynamic based on past performance

- Bayesian Online

- First Bayesian Fix then Online

Computation Time: ~30 Minutes

:::

::::

## Results

:::: {.columns}

::: {.column width="55%"}

```{r, cache = TRUE}

load("assets/mcrps_learning/naive_table_df.rds")

table_naive <- naive_table_df %>%

as_tibble() %>%

head(1) %>%

mutate_all(round, 4) %>%

mutate_all(sprintf, fmt = "%#.3f") %>%

kbl(

bootstrap_options = "condensed",

escape = FALSE,

format = "html",

booktabs = FALSE,

align = c("c", rep("c", ncol(naive_table_df) - 1))

) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:1, color = cols[9, "grey"]) %>%

kable_styling(font_size = 16)

for (i in 1:ncol(naive_table_df)) {

table_naive <- table_naive %>%

column_spec(i,

background = ifelse(

is.na(naive_table_df["stat", i, drop = TRUE][-ncol(naive_table_df)]),

cols[5, "grey"],

col_scale2(

naive_table_df["stat", i, drop = TRUE][-ncol(naive_table_df)],

rng_t

)

),

bold = i == which.min(naive_table_df["loss", ])

)

}

table_naive

load("assets/mcrps_learning/performance_data.rds")

i <- 1

j <- 1

for (j in 1:3) {

for (i in seq_len(nrow(performance_loss_tibble))) {

if (loss_and_dm[i, j, "p.val"] < 0.001) {

performance_loss_tibble[i, 2 + j] <- paste0(

performance_loss_tibble[i, 2 + j],

'***'

)

} else if (loss_and_dm[i, j, "p.val"] < 0.01) {

performance_loss_tibble[i, 2 + j] <- paste0(

performance_loss_tibble[i, 2 + j],

'**'

)

} else if (loss_and_dm[i, j, "p.val"] < 0.05) {

performance_loss_tibble[i, 2 + j] <- paste0(

performance_loss_tibble[i, 2 + j],

'*'

)

} else if (loss_and_dm[i, j, "p.val"] < 0.1) {

performance_loss_tibble[i, 2 + j] <- paste0(

performance_loss_tibble[i, 2 + j],

'.'

)

} else {

performance_loss_tibble[i, 2 + j] <- paste0(

performance_loss_tibble[i, 2 + j]

)

}

}

}

table_performance <- performance_loss_tibble %>%

kbl(

padding=-1L,

col.names = c(

'Description',

'Parameter Tuning',

'BOA',

'ML-Poly',

'EWA'

),

bootstrap_options = "condensed",

# Dont replace any string, dataframe has to be valid latex code ...

escape = FALSE,

format = "html",

align = c("l", "l", rep("c", ncol(performance_loss_tibble)-2))

) %>%

kable_paper(full_width = TRUE) %>%

row_spec(0:nrow(performance_loss_tibble), color = cols[9, "grey"])

# %%

for (i in 3:ncol(performance_loss_tibble)) {

bold_cells <- rep(FALSE, times = nrow(performance_loss_tibble))

loss <- loss_and_dm[, i - 2, "loss"]

table_performance <- table_performance %>%

column_spec(i,

background = c(

col_scale2(

loss_and_dm[, i - 2, "stat"],

rng_t

)

),

bold = loss == min(loss),

)

}

table_performance %>%

kable_styling(font_size = 16)

```

```{=html}

Coloring w.r.t. test statistic:

<-5

-4

-3

-2

-1

0

1

2

3

4

>5

Significance denoted by: . p < 0.1; * p < 0.05; ** p < 0.01; *** p < 0.001;

```

:::

::: {.column width = "45%"}

::: {.panel-tabset}

## Constant

```{r, fig.align="center", echo=FALSE, out.width = "400", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/constant.svg")

```

## Pointwise

```{r, fig.align="center", echo=FALSE, out.width = "400", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/pointwise.svg")

```

## B Constant PR

```{r, fig.align="center", echo=FALSE, out.width = "400", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/constant_pr.svg")

```

## B Constant MV

```{r, fig.align="center", echo=FALSE, out.width = "400", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/constant_mv.svg")

```

## Smooth.Forget

```{r, fig.align="center", echo=FALSE, out.width = "400", cache = TRUE}

knitr::include_graphics("assets/mcrps_learning/smooth_best.svg")

```

::::

:::

::::

## Results

::: {.panel-tabset}

## Chosen Parameters

```{r, warning=FALSE, fig.align="center", echo=FALSE, fig.width=12, fig.height=5.5, cache = TRUE}

load("assets/mcrps_learning/pars_data.rds")

pars_data %>%

ggplot(aes(x = dates, y = value)) +

geom_rect(aes(

ymin = 0,

ymax = value * 1.2,

xmin = dates[1],

xmax = dates[182],

fill = "Burn-In"

)) +

geom_line(aes(color = name), linewidth = linesize, show.legend = FALSE) +

scale_colour_manual(

values = as.character(cols[5, c("pink", "amber", "green")])

) +

facet_grid(name ~ .,

scales = "free_y",

# switch = "both"

) +

scale_y_continuous(

trans = "log2",

labels = scaleFUN

) +

theme_minimal() +

theme(

# plot.margin = unit(c(0.2, 0.2, 0.2, 0.2), "cm"),

text = element_text(size = text_size),

legend.key.width = unit(0.9, "inch"),

legend.position = "none"

) +

ylab(NULL) +

xlab("date") +

scale_fill_manual(NULL,

values = as.character(cols[3, "grey"])

)

```

## Weights: Hour 16:00-17:00

```{r, fig.align="center", echo=FALSE, fig.width=12, fig.height=5.5, cache = TRUE}

load("assets/mcrps_learning/weights_h.rds")

weights_h %>%

ggplot(aes(date, q, fill = weight)) +

geom_raster(interpolate = TRUE) +

facet_grid(

Expert ~ . # , labeller = labeller(Mod = mod_labs)

) +

theme_minimal() +

theme(

# plot.margin = unit(c(0.2, 0.2, 0.2, 0.2), "cm"),

text = element_text(size = text_size),

legend.key.height = unit(0.9, "inch")

) +

scale_x_date(expand = c(0, 0)) +

scale_fill_gradientn(

oob = scales::squish,

limits = c(0, 1),

values = c(seq(0, 0.4, length.out = 8), 0.65, 1),

colours = c(

cols[8, "red"],

cols[5, "deep-orange"],

cols[5, "amber"],

cols[5, "yellow"],

cols[5, "lime"],

cols[5, "light-green"],

cols[5, "green"],

cols[7, "green"],

cols[9, "green"],

cols[10, "green"]

),

breaks = seq(0, 1, 0.1)

) +

xlab("date") +

ylab("probability") +

scale_y_continuous(breaks = c(0.1, 0.5, 0.9))

```

## Weights: Median

```{r, fig.align="center", echo=FALSE, fig.width=12, fig.height=5.5, cache = TRUE}

load("assets/mcrps_learning/weights_q.rds")

weights_q %>%

mutate(hour = as.numeric(hour) - 1) %>%

ggplot(aes(date, hour, fill = weight)) +

geom_raster(interpolate = TRUE) +

facet_grid(

Expert ~ . # , labeller = labeller(Mod = mod_labs)

) +

theme_minimal() +

theme(

# plot.margin = unit(c(0.2, 0.2, 0.2, 0.2), "cm"),

text = element_text(size = text_size),

legend.key.height = unit(0.9, "inch")

) +

scale_x_date(expand = c(0, 0)) +

scale_fill_gradientn(

oob = scales::squish,

limits = c(0, 1),

values = c(seq(0, 0.4, length.out = 8), 0.65, 1),

colours = c(

cols[8, "red"],

cols[5, "deep-orange"],

cols[5, "amber"],

cols[5, "yellow"],

cols[5, "lime"],

cols[5, "light-green"],

cols[5, "green"],

cols[7, "green"],

cols[9, "green"],

cols[10, "green"]

),

breaks = seq(0, 1, 0.1)

) +

xlab("date") +

ylab("hour") +

scale_y_continuous(breaks = c(0, 8, 16, 24))

```

::::

## Non-Equidistant Knots

::: {.panel-tabset}

## Knot Placement Illustration

```{ojs}

bsplineData = FileAttachment("assets/mcrps_learning/basis_functions.csv").csv({ typed: true })

```

```{ojs}

// Defined above

// function updateChartInner(g, x, y, linesGroup, color, line, data) {

// // Update axes with transitions

// x.domain([0, d3.max(data, d => d.x)]);

// g.select(".x-axis").transition().duration(1500).call(d3.axisBottom(x).ticks(10));

// y.domain([0, d3.max(data, d => d.y)]);

// g.select(".y-axis").transition().duration(1500).call(d3.axisLeft(y).ticks(5));

// // Group data by basis function

// const dataByFunction = Array.from(d3.group(data, d => d.b));

// const keyFn = d => d[0];

// // Update basis function lines

// const u = linesGroup.selectAll("path").data(dataByFunction, keyFn);

// u.join(

// enter => enter.append("path").attr("fill","none").attr("stroke-width",3)

// .attr("stroke", (_, i) => color(i)).attr("d", d => line(d[1].map(pt => ({x: pt.x, y: 0}))))

// .style("opacity",0),

// update => update,

// exit => exit.transition().duration(1000).style("opacity",0).remove()

// )

// .transition().duration(1000)

// .attr("d", d => line(d[1]))

// .attr("stroke", (_, i) => color(i))

// .style("opacity",1);

// }

chart0 = {

// State variables for selected parameters

let selectedMu = 0.5;

let selectedSig = 1;

let selectedNonc = 0;

let selectedTailw = 1;

const filteredData = () => bsplineData.filter(d =>

Math.abs(selectedMu - d.mu) < 0.001 &&

d.sig === selectedSig &&

d.nonc === selectedNonc &&

d.tailw === selectedTailw

);

const container = d3.create("div")

.style("max-width", "none")

.style("width", "100%");;

const controlsContainer = container.append("div")

.style("display", "flex")

.style("gap", "20px");

// slider controls

const sliders = [

{ label: 'Mu', get: () => selectedMu, set: v => selectedMu = v, min: 0.1, max: 0.9, step: 0.2 },

{ label: 'Sigma', get: () => Math.log2(selectedSig), set: v => selectedSig = 2 ** v, min: -2, max: 2, step: 1 },

{ label: 'Noncentrality', get: () => selectedNonc, set: v => selectedNonc = v, min: -4, max: 4, step: 2 },

{ label: 'Tailweight', get: () => Math.log2(selectedTailw), set: v => selectedTailw = 2 ** v, min: -2, max: 2, step: 1 }

];

// Build slider controls with D3 data join

const sliderCont = controlsContainer.selectAll('div').data(sliders).join('div')

.style('display','flex').style('align-items','center').style('gap','10px')

.style('flex','1').style('min-width','0px');

sliderCont.append('label').text(d => d.label + ':').style('font-size','20px');

sliderCont.append('input')

.attr('type','range').attr('min', d => d.min).attr('max', d => d.max).attr('step', d => d.step)

.property('value', d => d.get())

.on('input', function(event, d) {

const val = +this.value; d.set(val);

d3.select(this.parentNode).select('span').text(d.label.match(/Sigma|Tailweight/) ? 2**val : val);

updateChart(filteredData());

})

.style('width', '100%');

sliderCont.append('span').text(d => (d.label.match(/Sigma|Tailweight/) ? d.get() : d.get()))

.style('font-size','20px');

// Add Reset button to clear all sliders to their defaults

controlsContainer.append('button')

.text('Reset')

.style('font-size', '20px')

.style('align-self', 'center')

.style('margin-left', 'auto')

.on('click', () => {

// reset state vars

selectedMu = 0.5;

selectedSig = 1;

selectedNonc = 0;

selectedTailw = 1;

// update input positions

sliderCont.selectAll('input').property('value', d => d.get());

// update displayed labels

sliderCont.selectAll('span')

.text(d => d.label.match(/Sigma|Tailweight/) ? (2**d.get()) : d.get());

// redraw chart

updateChart(filteredData());

});

// Build SVG

const width = 1200;

const height = 450;

const margin = {top: 40, right: 20, bottom: 40, left: 40};

const innerWidth = width - margin.left - margin.right;

const innerHeight = height - margin.top - margin.bottom;

// Set controls container width to match SVG plot width

controlsContainer.style("max-width", "none").style("width", "100%");

// Distribute each control evenly and make sliders full-width

controlsContainer.selectAll("div").style("flex", "1").style("min-width", "0px");

controlsContainer.selectAll("input").style("width", "100%").style("box-sizing", "border-box");

// Create scales

const x = d3.scaleLinear()

.domain([0, 1])

.range([0, innerWidth]);

const y = d3.scaleLinear()

.domain([0, 1])

.range([innerHeight, 0]);

// Create a color scale for the basis functions

const color = d3.scaleOrdinal(d3.schemeCategory10);

// Create SVG

const svg = d3.create("svg")

.attr("width", "100%")

.attr("height", "auto")

.attr("viewBox", [0, 0, width, height])

.attr("preserveAspectRatio", "xMidYMid meet")

.attr("style", "max-width: 100%; height: auto;");

// Create the chart group

const g = svg.append("g")

.attr("transform", `translate(${margin.left},${margin.top})`);

// Add axes

const xAxis = g.append("g")

.attr("transform", `translate(0,${innerHeight})`)

.attr("class", "x-axis")

.call(d3.axisBottom(x).ticks(10))

.style("font-size", "20px");

const yAxis = g.append("g")

.attr("class", "y-axis")

.call(d3.axisLeft(y).ticks(5))

.style("font-size", "20px");

// Add a horizontal line at y = 0

g.append("line")

.attr("x1", 0)

.attr("x2", innerWidth)

.attr("y1", y(0))

.attr("y2", y(0))

.attr("stroke", "#000")

.attr("stroke-opacity", 0.2);

// Add gridlines

g.append("g")

.attr("class", "grid-lines")

.selectAll("line")

.data(y.ticks(5))

.join("line")

.attr("x1", 0)

.attr("x2", innerWidth)

.attr("y1", d => y(d))

.attr("y2", d => y(d))

.attr("stroke", "#ccc")

.attr("stroke-opacity", 0.5);

// Create a line generator

const line = d3.line()

.x(d => x(d.x))

.y(d => y(d.y))

.curve(d3.curveBasis);

// Group to contain the basis function lines

const linesGroup = g.append("g")

.attr("class", "basis-functions");